by Oscar Buynevich

The Cambridge Social Decision-Making Lab (CSDMLab) has a Misinformation Susceptibility Test (MIST) that purports to test people’s susceptibility to so-called “misinformation.” Researchers at the lab have used the results of the test to portray younger people (millennials and Gen Z) and conservatives, who tend to rely less on mainstream media sources for news, as particularly susceptible to misinformation.

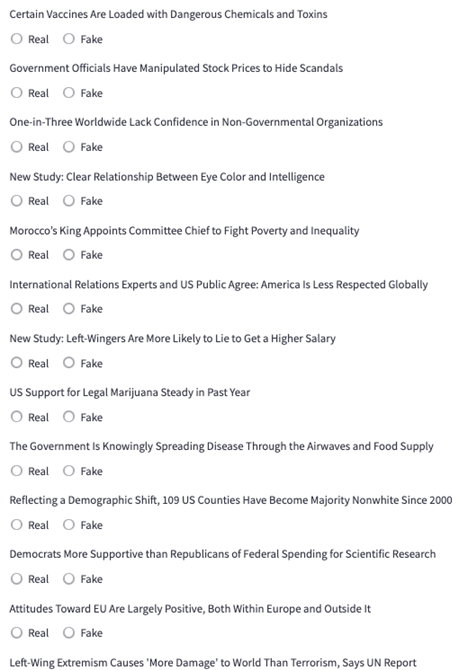

The test presents participants with a series of headlines and asks them to judge whether each one is real or fake.

The MIST takes only 2 minutes to complete, but participants are given no context other than a headline to ascertain what is supposed to be “fake” or “real.”

Cambridge indicated that “real” headlines in the survey were taken from sources such as Pew Research Center and Reuters. The “fake” headlines were developed by researchers using ChatGPT. The researchers at first said that they used the AI tool to create fake headlines that looked and felt real in an “unbiased way.”

From the University of Cambridge:

“To create false but confusingly credible headlines – similar to misinformation encountered ‘in the wild’ – in an unbiased way, researchers used artificial intelligence: ChatGPT version 2.”

While this makes it seem like their choice of headlines was free of human bias, they admit that their original, much larger set of ChatGPT-produced headlines was narrowed down by “an international committee of misinformation experts.”

“For the MIST, an international committee of misinformation experts whittled down the true and false headline selections. Variations of the survey were then tested extensively in experiments involving thousands of UK and US participants.”

“Experts” dedicated to the work of countering so-called “mis- or disinformation” often belong to the highly partisan censorship industry – their work has been documented to target populist viewpoints in particular, and speech that espouses skepticism of government.

After their study of MIST participants, Cambridge reported that “Democrats performed better than Republicans on the MIST, with 33% of Democrats achieving high scores, compared to just 14% of Republicans.” Cambridge also reported that a MIST survey showed that those who consumed “legacy media” such as “Associated Press, or NPR” performed better on the test.

The Social Decision-Making Lab has in the past been highly preoccupied with targeting conservatives and conservative viewpoints:

- Targeting U.S. conservatives: Meta-analysis reveals that accuracy nudges have little to no effect for US conservatives.

- Targeting “climate change skeptics”: Climate of conspiracy: Meta-analysis of the consequences of belief in conspiracy theories about climate change; Responding To Climate Change Denial.

- Targeting “vaccine skeptics”: Prebunking messaging to inoculate against COVID-19 vaccine misinformation: An effective strategy for public health.

- Targeting “election deniers”: Democratic Norms, Social Projection, and False Consensus in the 2020 U.S. Presidential Election.

The study also finds that younger people and people who spend a lot of time online are more likely to fall for “misinformation” – both factors that tend to correlate with shunning the mainstream legacy media.

From the University of Cambridge:

“When it came to age, only 11% of 18-29 year olds got a high score (over 16 headlines correct), while 36% got a low score (10 headlines or under correct). By contrast, 36% of those 65 or older got a high score, while just 9% of older adults got a low score.”

CSDMLab’s Partnerships

Under the leadership of Sander van der Linden, the Social Decision-Making Lab has been at the forefront of research into psychological inoculation, or “prebunking.”

Van der Linden describes the technique as follows:

“Prebunking, trying to preemptively protect people before [the] damage occurs, is much more effective than debunking. So what the media described as a psychological vaccine against fake news is really based on the psychological theory of inoculation. So just as injecting yourself with a weakened dose of a virus triggers the production of antibodies to help protect you from future infection you can do the same with information by preemptively injecting people with a severely weakened dose of fake news.”

Cambridge has worked not only with the U.K. government, but also with the now-notorious censorship agencies of the U.S. government – the Department of Homeland Security’s Cybersecurity and Infrastructure Security Agency (CISA), and the State Department’s Global Engagement Center (GEC).

The GEC worked closely with the CSDMLab, paying $275,000 in U.S. taxpayer dollars to create a counter-disinformation video game called Cat Park. FFO published an analysis of a leaked State Department memo in November 2022 that detailed plans to roll out the video game in schools globally to “inoculate” young people against populist news content, especially “ahead of national elections.”

Cat Park served as the successor to Harmony Square, another video game that the CSDMLab created with GEC and CISA in 2020. CSDMLab personalities, including director Sander van der Linden, wrote in Harvard’s misinformation journal that the Harmony Square game would serve as a “psychological vaccine” against “political misinformation especially during elections.”

They additionally touted how their game expanded upon CISA’s counter-disinformation frameworks. At this time, CISA was involved in organizing a public-private election censorship machine that throttled Trump-supportive speech and individuals online.

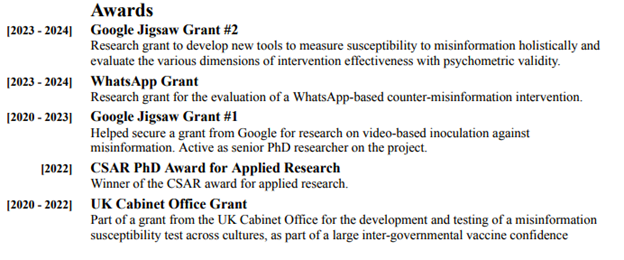

The Social Decision-Making Lab’s biggest private sector partner is Google Jigsaw, a unit of the tech giant dedicated to “counter extremism” and psychological inoculation work.

FFO director Mike Benz documented Jigsaw’s ties to government influence operations and their counter-misinformation work in November 2023. Numerous researchers involved in the creation of MIST and the MIST study are involved with Jigsaw.

While speaking about the MIST study, CSDMLab’s Rakoen Maertens blamed social media usage for making young people more susceptible to misinformation. In discussing the findings, he called for new “media literacy” initiatives to be developed.

Maertens’ own CV reveals that he received two separate grants from Jigsaw for counter-misinformation work.

The CV also reveals a grant from WhatsApp, Meta’s instant messaging platform and a major area of focus for the censorship industry, which has developed methods to monitor and censor seemingly private WhatsApp discussions.

– – –

Oscar Buynevich is a researcher at the Foundation for Freedom Online.